New AI Method Lets Robots Get By With a Little Help From Their Friends

Jun 23, 2020 — Atlanta, GA

New artificial intelligence (AI) research is using deep learning to improve the efficiency of communications between AI-enabled agents – like robots, drones, and self-driving cars – that are working together to solve computer vision and perception tasks.

Within the new multi-stage communications deep learning framework developed by researchers from the Machine Learning Center at Georgia Tech, a robot drone with a blocked view of a collapsed building, for example, can query its teammates until it finds one with relevant details to fill in the blanks.

“The current model is for all agents to be talking to all agents at the same time, a fully connected network, via high bandwidth connection, even when it’s not necessary. Our goal is to maximize accuracy of perception tasks, while minimizing bandwidth usage,” said Zsolt Kira, associate director of the Machine Learning Center and an assistant professor in the College of Computing.

For the specific perception task of classifying each pixel of an image, known as semantic segmentation, Kira says that the new framework has achieved this goal by improving accuracy by as much as 20 percent while using just a fourth of the bandwidth required by current state-of-the-art models.

“Rather than communicating all at once, in our model, each agent learns when, with whom, and what to communicate in order to complete an assigned perception task as efficiently as possible,” said Kira, who advises machine learning (ML) Ph.D. student Yen-Cheng Liu, lead researcher on the project.

The new approach limits the amount of communications needed by breaking down the process into three stages within the team’s deep learning framework.

In the request stage, the robot with the blocked view or with a degraded sensor sends an extremely small query to each of its teammates. In the matching stage, the other agents evaluate the query to see if they have relevant information to share with the initiator.

“We refer to this as a handshake mechanism to determine if communication is even needed in the first place and if it is, what information to transmit, and who to send it to,” said Kira.

During the connect stage, the initiating robot integrates the details provided by its teammates to fill the gaps in its own observations. The agent then uses this update to improve its estimates for the overall task.
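The three stages can be illustrated with a small toy sketch. This is not the team's implementation: the function names, the dot-product scoring, the softmax weighting, and the `threshold` cutoff are all assumptions made for illustration, and the actual framework learns these components with deep networks.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Turn raw match scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def handshake(query, teammate_keys, teammate_features, own_features, threshold=0.2):
    """Toy request / matching / connect round (hypothetical, for illustration).

    query             -- small vector sent by the agent with the degraded view
    teammate_keys     -- one small vector per teammate summarizing what it sees
    teammate_features -- one larger feature vector per teammate
    own_features      -- the requesting agent's own feature vector
    threshold         -- assumed cutoff below which a teammate is not contacted
    """
    # Request stage: the small query is sent to every teammate.
    # Matching stage: each teammate scores the query against its own key.
    scores = [dot(query, key) for key in teammate_keys]
    weights = softmax(scores)

    # Connect stage: only teammates with a high enough weight send their
    # (larger) features, which are blended into the requester's own features.
    selected = [i for i, w in enumerate(weights) if w >= threshold]
    fused = list(own_features)
    for i in selected:
        fused = [f + weights[i] * t for f, t in zip(fused, teammate_features[i])]
    return fused, selected


# Example: only the first teammate's key matches the query well.
fused, selected = handshake(
    query=[1.0, 0.0],
    teammate_keys=[[5.0, 0.0], [0.0, 5.0], [-5.0, 0.0]],
    teammate_features=[[1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [3.0, 3.0, 3.0]],
    own_features=[0.0, 0.0, 0.0],
)
```

In this toy setup, bandwidth is saved because the full feature vectors travel only from the teammates selected in the matching stage, while every other exchange involves just the short query and key vectors.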

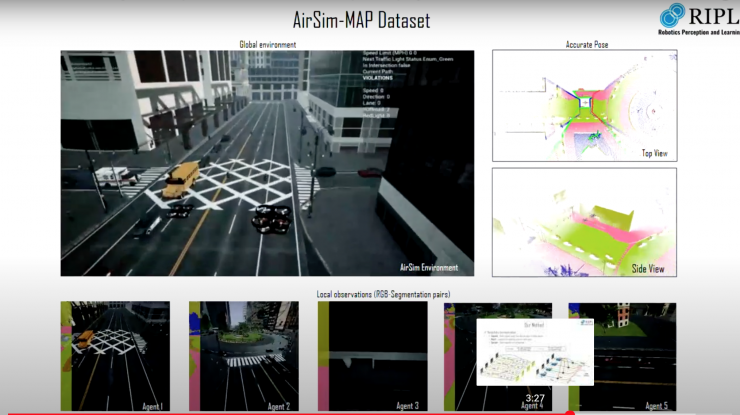

For the study, the research team – which includes ML Ph.D. students Junjiao Tian and Nathaniel Glaser – developed a training dataset within AirSim, an open-source simulator for drones, cars, and other autonomous or semiautonomous vehicles. The multi-view dataset, known as AirSim-MAP, is based on data gathered from a team of five virtual drones flying through a dynamic landscape. Data captured includes RGB images, depth maps, pose, and semantic segmentation masks for each agent.

“We’ll be releasing this dataset soon to allow other researchers to explore similar problems,” said Kira, director of the Robotics Perception and Learning lab, where the study was completed.

The results of the team’s work are being presented virtually this week at the 2020 IEEE International Conference on Robotics and Automation in a paper titled “Who2com: Collaborative Perception via Learnable Handshake Communications.” The research is supported by a grant from the Office of Naval Research (N00014-18-1-2829).

Albert Snedeker, Sr. Communications Manager